News

Communication-efficient quantum algorithm for distributed machine learning

Distributed machine learning has attracted great attention as a method to deal with large-scale or distributed data. In distributed machine learning, the required communication between different machines quantified by communication complexity is one of the critical factors in determining an algorithm’s performance. Therefore, developing communication-efficient algorithms is important for distributed machine learning applications when the communication bandwidth is constrained. Recently, quantum algorithms have been developed to accelerate computation in a series of machine learning problems. However, whether quantum algorithms can accelerate communication in distributed machine learning scenarios remains an open question.

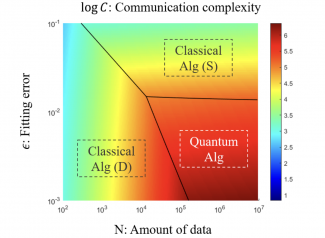

Recently, we designed a quantum counting-based algorithm that realizes two traditional machine learning problems, linear regression and SoftMax regression, with accelerated communication in distributed scenarios. When solving the problems with statistical precision, our quantum algorithm has a square-root reduction of communication complexity compared to classical theoretical limits. Following previous works designing time-efficient quantum algorithms for regression problems, this work further shows both computation and communication acceleration in traditional machine learning tasks. Our algorithm can also benefit the application scenarios when privacy preservation and data masking are required, as neither of the involved machines can obtain data value of the other machine during the fitting process.

Figure: To solve linear regression problem, quantum counting base algorithm shows advantage in communication complexity when requiring small fitting error () and the amount of data (N) is large, compared with the deterministic (D) and stochastic (S) classical algorithm.